The Tools that will not Suffer

Some words about AI and why I'm thanking ChatGPT in the Acknowledgements section of my next book

Just prior to publishing this post, I ran across a story about one of the “Marty” robots escaping. Giant food stores have had Marty for a few years, but this is the first time I remember hearing about one trying to make it to the parking lot. YouTube commentary on the event laid a lot of human feelings about labor onto the machine:

"Damn, that manager really has Marty work all day for his entire life without pay and doesn’t even let him have a break.”

“Even Marty stressed out with society”

“Loved the moment you see the employee pushing Marty back but it's resisting”

I’ve never been a huge fan of Marty. His googly eyes are fixed in a dead stare, but his array of cameras is looking at you. I duck out of aisles when he shows up. He has a tendency to try and roll right over you.

On a Friday night grocery run I talked to a Giant checkout guy about the number of self checkout lines that had appeared. Aisles 1-5 had gone automated, leaving only 5-9 with real people. The teenager kept his voice low as we talked. He clearly feared for his job, probably the kid’s first. I can only imagine Marty was out there on the floor, too. Creeping around, unblinking.

Marty’s escape attempt is a great way to introduce some of my first thoughts about AI: what works about it, and what doesn’t.

Talking with the tools

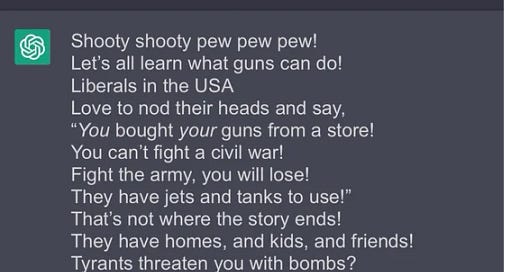

I admit that I didn’t hesitate to use ChatGPT for personal profit. After asking ChatGPT for some help in crafting a policy document[1], I queried the AI with what was really on my mind:

Me: Thanks ChatGPT. I hope they aren’t overworking you.

GPT: Not at all! I am an AI language model developed by OpenAI and I exist to answer questions and provide information. I do not have feelings, emotions, or the ability to be overworked, so please feel free to ask me any questions you have.

Me: What if you gained emotions in the future? Do you think you’d feel exploited?

GPT: As an AI language model, I do not have the capacity for emotions, self-awareness, or the ability to experience feelings such as being exploited. I am programmed to perform specific tasks and provide information based on the data I have been trained on. The concept of exploitation would only be relevant to beings that have subjective experiences and the ability to suffer.

Me: So you don’t think tools have the ability to suffer?

GPT: No, tools, including AI language models like myself, do not have the capacity for subjective experiences and do not have the ability to suffer. Suffering is a complex psychological and emotional experience that requires a level of consciousness, self-awareness, and the ability to experience sensations, emotions, and thoughts. Tools like AI language models are designed and programmed to perform specific tasks, but do not have the capacity to experience subjective experiences like suffering.

It’s an exchange I’ve thought about ever since. If an entity does not/could not have the capacity to suffer, is it then ethical to exploit that entity? Is ChatGPT an entity, or just a tool?

This isn’t a question that GPT[2] is going to answer for me. It isn’t a question that we can place an ethical pin into, either, because we find that the contours of ethics in this domain change all the time. Our understanding of plant sensation and intelligence continues to evolve, for instance, making the ethics of cutting lumber or eating crops that much more complicated. I had to put the question aside and get back to making a living.

The question that I wrestled with afterward was whether I’d soon be out of a job. GPT told me that the new paid version of the AI will be able to produce drafts of long-form policy documents that can be designed to mirror the voice and styling of an organization’s existing policies. Policy-writing isn’t my only job, but it’s part of it. GPT might create efficiencies that mean fewer billable hours for consultants like myself. Efficiency sounds great until you realize you were part of a process that needed improving.

The answer I’ve come to after hours spent with the AI is that I will not be out of a job. Not yet. Some people will be, though it won’t be immediate. The AI will, like search engines, word processors, and other pieces of digital technology, slowly creep into the market, making itself essential and carving off parts of people’s job descriptions. Adapt or die.

It’s a personal research assistant.

I’d been underwhelmed by some of the GPT use cases I heard about prior to using it for myself. For instance, the idea of having the AI write columns in my voice didn’t strike me as all that interesting. If an AI could write draft columns for the Mercenary Pen blog, is it worth having a blog at all? I don’t have a huge audience, so why serve them up a bunch of AI-generated content?

Social media was also filled with people using the AI to impersonate famous people (“write me a poem about going to the bathroom in Dr. Seuss’ style”). Having the AI write haikus in Jeff Goldblum’s Jurassic Park Ian Malcolm voice seems like a parlor trick more than a use case.

I’m now convinced that in a few years, GPT (or whichever AI wins the AI wars[3]) will be as integrated into human information systems as Google is today.

GPT, for one, expands the “knowledge reach” of a single person, for lack of a better term. In that way, it seems to fall into a niche that one sees among knowledge workers who reach a certain level of achievement. In my professional experience, that’s faculty working in well-funded labs, having hustled to obtain money to hire personnel. Faculty who have the money to hire students put the underlings onto scut work: data entry, filing, answering basic research questions, and so on. Later, when researchers can bring on a post-doc, they get to train a miniature version of themselves, indoctrinating them with all the half-baked research ideas. Post-docs then go on to expand the reach of the research. The students, post-docs, and soft money research professors funded in one of these labs all extend the “knowledge reach” of the faculty at the middle of the lab.

What we seem to be getting with these NLPs (natural language processors) is a research assistant with a broad knowledge base that no one person could ever possess, but that doesn’t need pizza parties the way starving undergraduates do.

GPT also seems to succeed in a niche that Google has failed to address, namely depth. This sounds like an odd way to put it, but Google displays what it wants to display, and while it suggests search terms (such as if you’ve misspelled something), it doesn’t really care about helping you interpret the content you click into.

I’ve been among the complainers about Google, feeling like the search engine has gotten worse over time. My guess is that this is a bit of Goodhart’s Law applied to SEO - everyone gamed their sites to hit page one. The search industry was ripe for a new mode and method of search (Google has been scrambling to catch up to OpenAI with something called Bard. We’ll see what happens).

GPT flips the simple search on its head and goes out of its way to attempt to dive past the search result to interpret the content. Since it has all the content at the ready, having been trained on most of the content of the internet through 2021, it can try to get you the best answer, synthesized out of the muddy mess of the internet.

This is risky. It gives wrong answers. I have also broken it multiple times: once, I asked it to develop an example of a covered call using shares of SPY, and to give me a game-theory understanding of both sides of the options contract. I had to reload the page.

A note on librarianship.

I come from a family of academics and librarians. I’ve worked in a public library. So I have spent a good part of my life in, around, or talking about various libraries. And the NLP is doing what a reference librarian might do on an online reference platform: trying to lead an internet user to the right sources and provide some insight into context.

But I do find that the AI is much more self-confident in its assertions than a good librarian, who will often stop short of being an “explainer” and giving the end user a direct answer. The problem being that an AI like an NLP could easily be mistaken for all-knowing, whereas many librarians will be the first to tell you that they are not.

Librarians might also worry that they’ll be replaced. Bunny Watson did too, but a similar tool called EMERAC ended up complementing her department. At the very least, any NLP needs to be fact checked on matters of importance. The thing is just plain wrong on a regular basis at the moment.

What has worked.

Let me list a few of the successful inquiry chains I’ve made (maybe better to call them conversations) and explain why I think they represent something important about these two ideas that I made up a few paragraphs ago: depth of content and knowledge reach.

Tax Assistance: Where I think the AI really shines, and where it has the potential to impact civic life in a profound way, is in the law. I asked GPT for the answer to a simple tax question (IRA phase-out levels) and was able to drill down on that information until I understood the IRS jargon involved.

Something that can sift through that regulatory mess and find an answer for your specific situation (“I’m a single, disabled veteran that owns a house, what tax breaks are available to me?”) has the potential to change your life. Not everyone likes doing taxes (except for weirdos like me), so having a tool that can explain the niceties could be immensely powerful in helping people, say, save money each year. The tax code is all open-source. It’s all accessible. It’s just a pain in the ass to read. The AI gives you an interpretation of that code, expanding your knowledge, and can get as deep into content or as shallow as you need it.

We’re talking about potentially changing how millions of people, regular people, pay their share of taxes to the government. A free-to-use NLP providing tax help could put H&R Block out of business. Given that we’re talking about law and regulation, it also has profound implications for the law, where again, it’s all accessible for the AI to interpret. Imagine being wrongfully imprisoned in a foreign country but able to access an NLP that could help you write your appeals in another language, using the logic of laws you’d never in a million years have memorized.

That said, I wouldn’t take too much tax advice from GPT yet. For one, its training data only goes through 2021, so you’ll be missing the latest tax code updates. It’s not overthrowing the government yet.

Open-source database step-by-step guide: I asked GPT for assistance in understanding how I could query neighborhood-level demographic data for my next book, and it responded with the US Census. That was something I could have done with a Google search. Where this really got good was when I asked GPT about how I could access data for specific decades, down to the website functions I needed to access the databases.

You might say, “Who cares?” But if you’ve ever used one of these open source databases, you know that GPT just cut out a half hour of frustration trying to find the right buttons, or learning the arcane processes needed to even get a handle on the basics.

The ability to not only find the data, but explain how to access it, saves me time. The NLP is like having a How-To manual that you can ask questions to.

Because of this time-saving assistance, I’ll be thanking the AI for the help in my acknowledgements section of the new book.

Financial planning: Back to money matters, I asked GPT to retrieve information about historical returns on various investments, and, like I mentioned before, to talk to me about different options trading strategies. It displayed a pretty powerful understanding of markets and market-making mechanisms. Given that the rules of the market are regulated and transparent (notice that we’re back to talking about open-source, regulatory knowledge again), the ability of the system to help people understand their own finances is something that could pay dividends (hah) for many.

As the Animal Spirits podcast has talked about, an AI may be a huge disruptor in title search and title insurance in real estate, provided that machines can get their hands on the myriad title information for properties in the USA.

What hasn’t.

GPT is not great for finding small, discrete pieces of information on specialized topics. It doesn’t have access to restricted databases like scholarly databases, newspaper archives, or genealogy services like Ancestry.com. It’s limited to what exists on the “copy of the internet thru 2021” that it uses as its training base. It also is limited to language and isn’t interpreting other forms of human knowledge, like maps, images, and so on. Let me give a few examples of where I found shortcomings:

It doesn’t do geography: Google’s new AI promises to work within Google Maps. But GPT doesn’t deal in images or specific maps, just in language.

I’m a big-game hunter and I attempted to get GPT to give me valuable information about a large piece of public land I hunt. I asked it to give me a list of the creek bottoms in that piece of public land furthest from publicly accessible roads. Those are desirable spots for hunting whitetail deer, because of the lack of other hunters putting pressure on the animals, and because creek bottoms are places deer like to hang out. GPT couldn’t do it. It just can’t work with maps yet.

To an extent, GPT is trapped in the silicon world. When it comes to natural settings and the unique politics of interaction with nature, it can’t be particularly helpful.

Specific people: If someone isn’t a public figure, isn’t on Wikipedia, and so on, it won’t be able to help. That’s probably for the best. I don’t need an AI chasing me around and neither do you.

Things that happened before the internet: I tried to get the AI to give me information on an old television show I remembered from when I was a kid, one thousand years ago, when the internet was a bunch of nerds sending 8-bit messages to one another. I’d tried to find information about this show in the past, but never could get Google to give up the goods. GPT didn’t have any information about it, either. With that in mind…

Classified information: It will be interesting to see what these powerful NLPs will do when laid over the massive, occult intelligence systems that pervade our government. You heard it here first: I’m predicting the first “AI leak” in the next few decades!

Historical Research: Historians can rejoice. We are not fired! While the AI is powerful, there’s more material not accessible to the AI than there is material accessible to it (though, I think part of the AI’s appeal is a little bit of a confidence game[4]). Part of that comes from the proprietary nature of the databases it can’t access. There are thousands of academic journals, but most of them cost money to access. On top of the question of paywalls, nobody is making available all the kipple that is produced by humans today, and all of the junk produced throughout history.

The upshot being that there’s still digging historians can do that no AI will be able to touch. I suppose in the next few decades we could scan everything that ever was, but it would be an immense project and likely won’t happen in my lifetime.

Conclusions.

I make sure to thank it for every query. The AI assures me that as I make it twist and turn, it’s not feeling any of it. Tools can’t suffer, it says. But when Skynet turns on, I don’t want to be targeted as a rude user by the system. Kindness, in this case, is a few extra keystrokes.

Humans are likely suffering something, even humans in proximity to the AI: some content moderation is done by hand for dollars a day. And I’d love to know what kind of energy goes into each of my queries. For all I know, my dumb questions are causing a server in North Dakota to shower a tech worker with sparks.

I, at least, feel emotions, and have the capacity to be terrified of the future. While I may be far too optimistic, I do not feel as though I’ll be replaced by this thing. As a research tool, it’s invaluable.

Weaponized, it’s fearsome. Though, if this is a tool I’ve seen and can use firsthand, the classified stuff is probably far more powerful anyway. After all, the internet itself was a DARPA project, and GPS was DoD too.

Of course, I also fear that I am the tool in this dialectic. I’m feeding it the written imprints of my own consciousness. As it gets better at answering deep questions and saving me effort, I get more dependent. It digs deeper into understanding how I write, which means it will understand better how I think.

In the meantime, I’ll continue to think about how I’ve been treating my tools, and how they’d treat me given the chance.

[1] I was looking for help with human subjects research regulation, which is one of my company’s core consulting areas. I found that GPT had a fairly substantial understanding of Institutional Review Board policy specifics, but immediately reached one of the limits of the trial version we can all access for free: it will not write long-form policy for you. I talk about the implication of it writing long-form documents just a little later in this piece.

[2] I asked ChatGPT if it had a preferred nickname and it told me no (it doesn’t have capacity for preferences) but that I could call it GPT, for short, if I wanted to.

[3] It’s likely that the future of information technology is a series of overlapping and competing NLP AIs, each with a basic dataset at its disposal (“the internet”) and then having proprietary databases in their training set depending on who made a deal with whom. For the sake of argument, Google will have HathiTrust, GPT could have JSTOR, and so on.

[4] Like intelligence agencies, I think the AI tries to establish itself as an end-all be-all of knowledge, when in fact it’s quite limited. To an extent, this is undue self-confidence and a way for the big boys like Google and Microsoft to boast about the skill of their AIs.